Bagging Predictors. Machine Learning

In this post you will discover the Bagging ensemble algorithm and the Random Forest algorithm for predictive modeling. Understanding the Importance of Predictors.

We are a community engaged in research and education in probability and statistics.

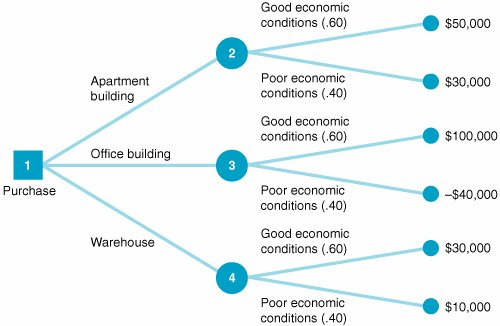

It attracted the interest of scientists from several fields, including Machine Learning, Statistics, Pattern. Supervised machine learning, unsupervised learning, semi-supervised learning, and reinforcement learning are the four primary types of machine learning. Recall that one of the benefits of decision trees is that they're easy to interpret and visualize.

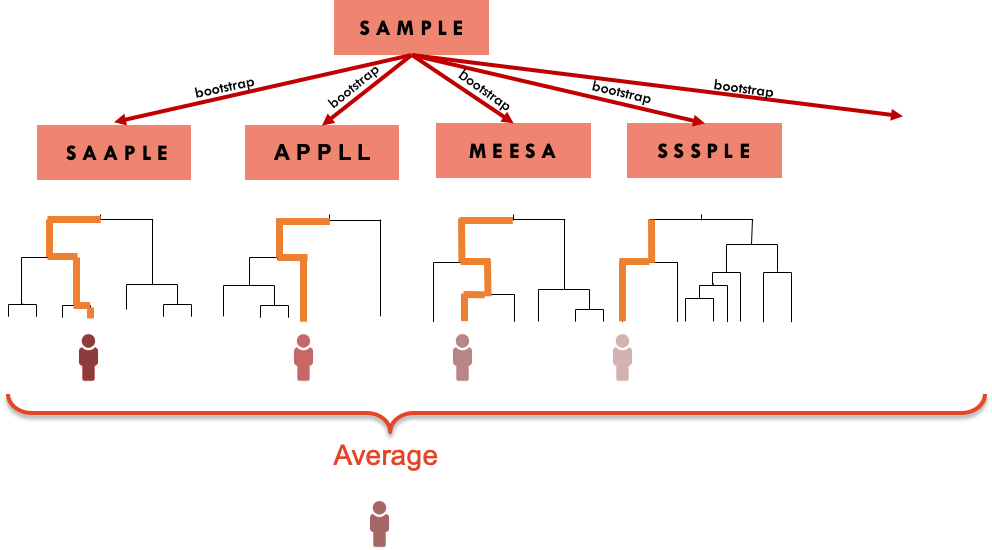

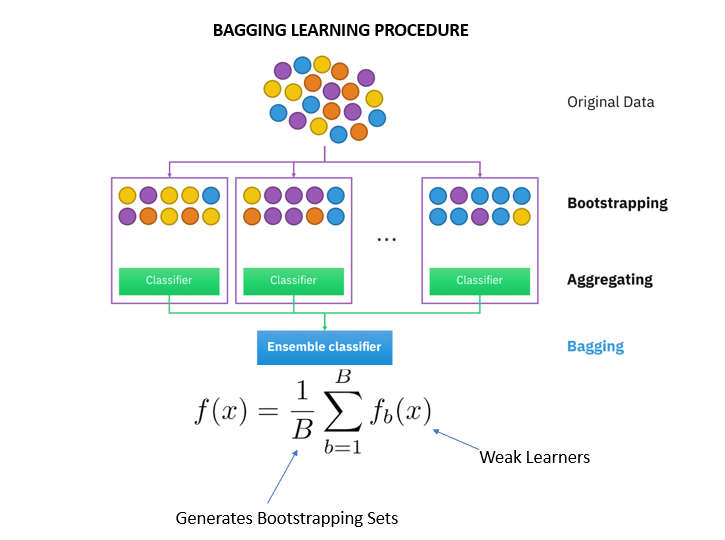

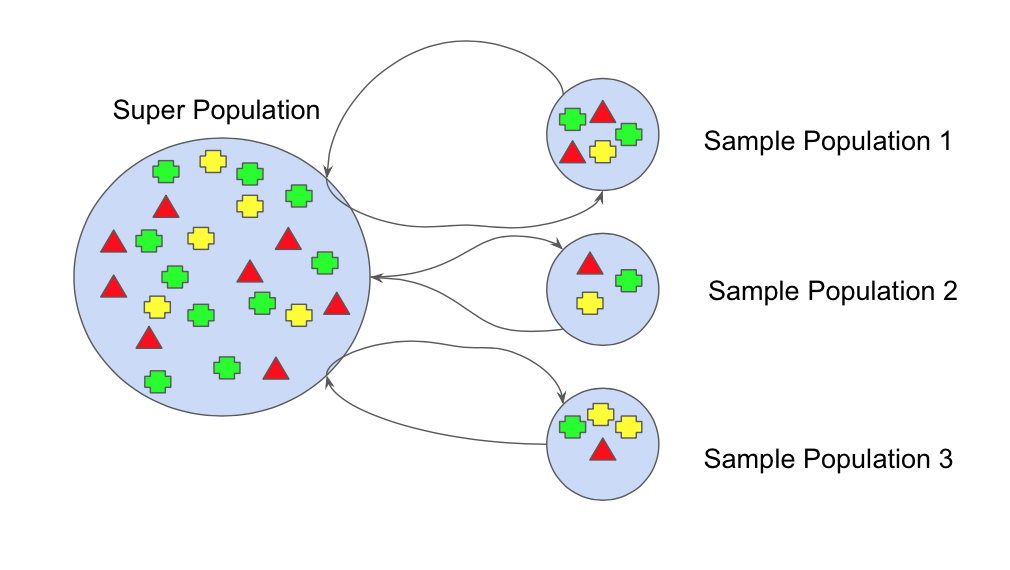

One Machine Learning algorithm can often simplify code and perform better. In Section 242 we learned about bootstrapping as a resampling procedure which creates b new bootstrap samples by drawing samples with replacement from the original training data. The retrieved data is passed to a machine learning model, and the crop name is predicted along with a calculated yield value.
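The resampling step described above can be sketched in a few lines. This is a minimal illustration using only the Python standard library. The function name `bootstrap_samples`, the `seed` parameter, and the toy `training_data` values are assumptions made for the example and do not come from the original text.

```python
import random

def bootstrap_samples(data, b, seed=0):
    """Return b bootstrap samples, each the same size as data."""
    rng = random.Random(seed)
    n = len(data)
    # random.choices draws WITH replacement, so an observation can appear
    # several times in one sample while others are left out entirely.
    return [rng.choices(data, k=n) for _ in range(b)]

# Hypothetical toy training data, for illustration only.
training_data = [2.1, 3.4, 1.9, 5.0, 4.2]
samples = bootstrap_samples(training_data, b=3)
for sample in samples:
    print(sample)
```

Each printed sample has the same length as the original data, but typically contains repeated values because the draws are made with replacement.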

Several machine learning methodologies are used for the calculation of accuracy. The website boasts that it uses more than 500 predictors to find customers the perfect date, but many customers complain that they get very few matches. After reading this post you will know.

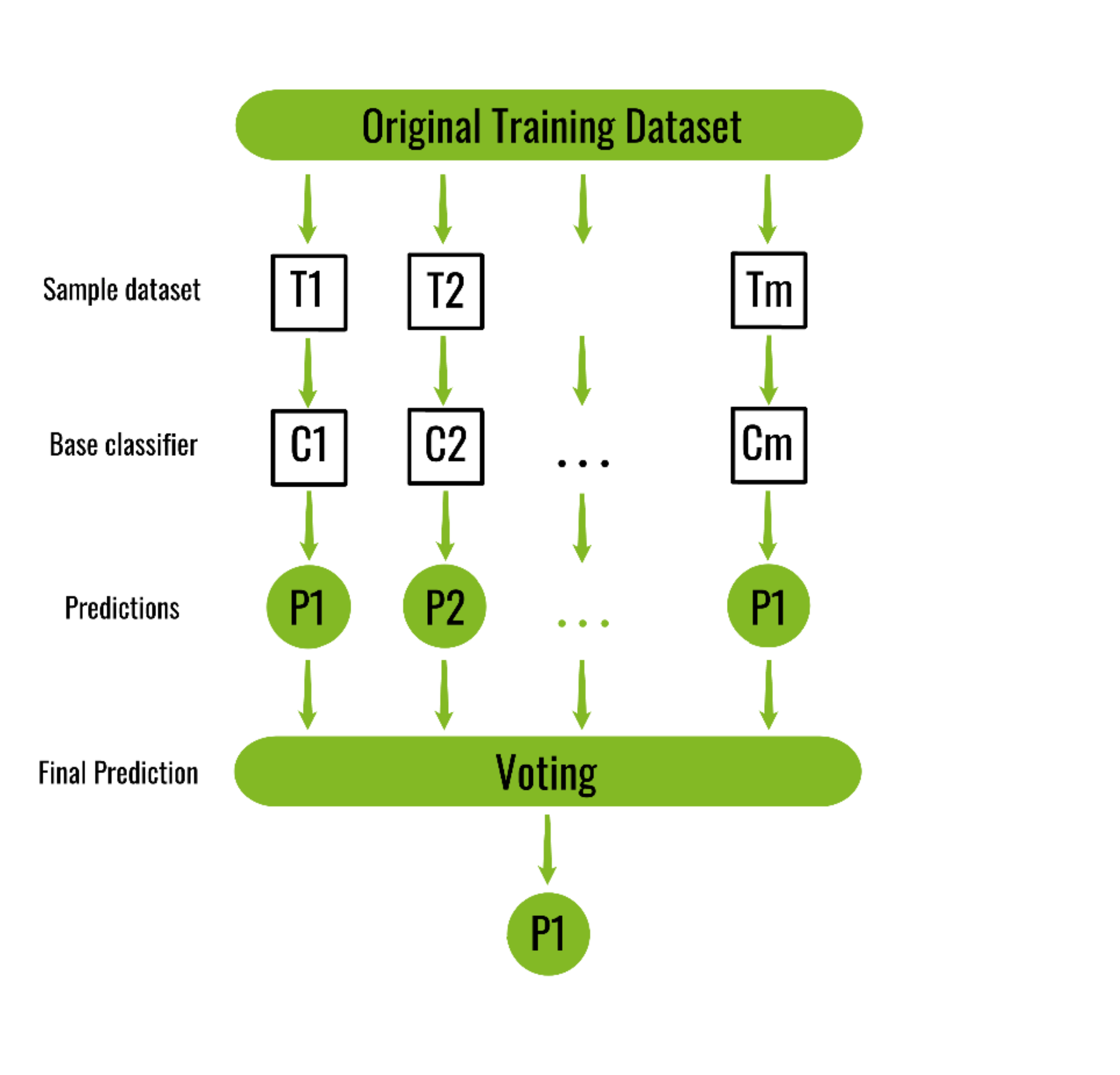

This paper focuses on the prediction of crop and calculation of its yield with the help of machine learning techniques. Bootstrap aggregating, also called bagging (from bootstrap aggregating), is a machine learning ensemble meta-algorithm designed to improve the stability and accuracy of machine learning algorithms used in statistical classification and regression. It also reduces variance and helps to avoid overfitting. Although it is usually applied to decision tree methods, it can be used with any. In this post you will discover the AdaBoost Ensemble method for machine learning.
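To make the idea concrete, here is a small from-scratch sketch of bagging for regression, assuming a one-split regression stump as the base learner and a simple average as the aggregation rule. The helper names `fit_stump` and `bagged_predictor`, the choice of 25 models, and the toy step-function data are all illustrative assumptions, not a reference implementation.

```python
import random
from statistics import mean

def fit_stump(points):
    """Fit a one-split regression stump to (x, y) pairs by minimising squared error."""
    xs = sorted({x for x, _ in points})
    overall = mean(y for _, y in points)
    best_err, best_t, best_left, best_right = float("inf"), None, overall, overall
    # Candidate thresholds are the midpoints between consecutive distinct x values.
    for lo, hi in zip(xs, xs[1:]):
        t = (lo + hi) / 2
        left = [y for x, y in points if x <= t]
        right = [y for x, y in points if x > t]
        lm, rm = mean(left), mean(right)
        err = sum((y - lm) ** 2 for y in left) + sum((y - rm) ** 2 for y in right)
        if err < best_err:
            best_err, best_t, best_left, best_right = err, t, lm, rm
    if best_t is None:
        # The bootstrap sample had a single distinct x: predict a constant.
        return lambda x: overall
    return lambda x: best_left if x <= best_t else best_right

def bagged_predictor(points, n_models=25, seed=0):
    """Train one stump per bootstrap sample and average their predictions."""
    rng = random.Random(seed)
    models = [fit_stump(rng.choices(points, k=len(points))) for _ in range(n_models)]
    return lambda x: mean(m(x) for m in models)

# Hypothetical toy data: a step from 1.0 to 3.0 at x = 5.
data = [(x, 1.0 if x < 5 else 3.0) for x in range(10)]
predict = bagged_predictor(data)
print(predict(2), predict(8))
```

Averaging over stumps trained on different bootstrap samples is what reduces variance: no single resample decides the final prediction.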

Bootstrap aggregating, also called bagging, is one of the first ensemble algorithms. In statistics and machine learning, ensemble methods use multiple learning algorithms to obtain better predictive performance than could be obtained from any of the constituent learning algorithms alone. Accuracy Table of Bagging Regressor Image By Panwar Abhash Anil.

Complex problems for which there is no good solution at all using a traditional. Machine learning algorithms are powerful enough to eliminate bias from the data. In fact the easiest part of machine learning is coding.

Statistics at UC Berkeley. Unlike a statistical ensemble in statistical mechanics, which is usually infinite, a machine learning ensemble consists of only a concrete finite set of alternative models but. What the boosting ensemble method is and generally how it works.

Its ability to solve both regression and classification problems, along with its robustness to correlated features and its variable importance plot, gives us enough of a head start to solve various problems. This chapter illustrates how we can use bootstrapping to create an ensemble of predictions. If you are new to machine learning, the random forest algorithm should be at your fingertips.

The key difference between Random forest and Bagging. What is a. JMLR has a commitment to rigorous yet rapid reviewing.

When we instead use bagging, we're no longer able to interpret or visualize an individual tree, since the final bagged model is the result of averaging many different trees. The Journal of Machine Learning Research (JMLR), established in 2000, provides an international forum for the electronic and paper publication of high-quality scholarly articles in all areas of machine learning. All published papers are freely available online. Boosting is an ensemble technique that attempts to create a strong classifier from a number of weak classifiers.
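As a rough illustration of how weak classifiers can be combined into a stronger one, the sketch below implements a bare-bones AdaBoost with one-dimensional threshold stumps. It is a simplified teaching example under stated assumptions: labels are +1 or -1, the helper names `best_stump`, `adaboost`, and `predict` are made up for this example, and the six-point dataset is a toy chosen so that no single stump can classify it correctly.

```python
import math

def best_stump(xs, ys, w):
    """Return (weighted error, threshold, polarity) of the best threshold stump."""
    best = None
    for t in sorted(set(xs)):
        for polarity in (1, -1):
            # The stump predicts `polarity` when x <= t and `-polarity` otherwise.
            err = sum(wi for wi, x, y in zip(w, xs, ys)
                      if (polarity if x <= t else -polarity) != y)
            if best is None or err < best[0]:
                best = (err, t, polarity)
    return best

def adaboost(xs, ys, rounds=3):
    """Train `rounds` weighted stumps; returns a list of (alpha, threshold, polarity)."""
    n = len(xs)
    w = [1.0 / n] * n  # start with uniform weights
    ensemble = []
    for _ in range(rounds):
        err, t, pol = best_stump(xs, ys, w)
        err = min(max(err, 1e-10), 1 - 1e-10)  # keep the logarithm finite
        alpha = 0.5 * math.log((1 - err) / err)
        ensemble.append((alpha, t, pol))
        # Increase the weight of misclassified points, decrease the rest, renormalise.
        w = [wi * math.exp(-alpha * y * (pol if x <= t else -pol))
             for wi, x, y in zip(w, xs, ys)]
        total = sum(w)
        w = [wi / total for wi in w]
    return ensemble

def predict(ensemble, x):
    """Weighted vote of all stumps."""
    score = sum(a * (pol if x <= t else -pol) for a, t, pol in ensemble)
    return 1 if score >= 0 else -1

# Hypothetical toy data: the label flips twice, so one stump is not enough.
xs = [1, 2, 3, 4, 5, 6]
ys = [1, 1, -1, -1, 1, 1]
ensemble = adaboost(xs, ys, rounds=3)
print([predict(ensemble, x) for x in xs])
```

Unlike bagging, where each model is trained independently on its own resample, each boosting round here depends on the previous one through the updated weights.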

Machine learning algorithms are based on math and statistics and so by definition will be unbiased. How to learn to boost decision trees using the AdaBoost algorithm. Random Forest is one of the most popular and most powerful machine learning algorithms.

In addition to developing fundamental theory and methodology, we are actively involved in statistical problems that arise in such diverse fields as molecular biology, geophysics, astronomy, AIDS research, neurophysiology, sociology, political science, education. It is a type of ensemble machine learning algorithm called Bootstrap Aggregation or bagging. Machine Learning can help humans learn. To summarize, Machine Learning is great for.

The performance of Random Forest is much better than Bagging regressor. Engineers can use ML models to replace complex explicitly-coded decision-making processes by providing equivalent or similar procedures learned in an automated manner from data. ML offers smart. Machine Learning is a part of Data Science, an area that deals with statistics, algorithmics, and similar scientific methods used for knowledge extraction.

Journal of Machine Learning Research. After reading this post you will know about. All human-created data is biased and data scientists need to account for that.

Difference Between Bagging and Random Forest. Over the years, multiple classifier systems, also called ensemble systems, have been a popular research topic and enjoyed growing attention within the computational intelligence and machine learning community. Problems for which existing solutions require a lot of hand-tuning or long lists of rules. There is no way to identify bias in the data.

The fundamental difference is that in Random forests only a subset of features are selected at random out of the total and the best split feature from the.
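The feature-subsetting step mentioned above can be sketched as follows. This shows only how the candidate features for a single split might be chosen, not a full tree builder. The function name `candidate_features` and its `max_features` parameter are hypothetical, and defaulting to the square root of the feature count is a commonly used heuristic that is assumed here rather than taken from the original text.

```python
import math
import random

def candidate_features(n_features, rng, max_features=None):
    """Pick the feature indices that one split is allowed to consider."""
    if max_features is None:
        # Assumption: square-root heuristic for the subset size.
        max_features = max(1, int(math.sqrt(n_features)))
    # rng.sample draws WITHOUT replacement, so no feature is listed twice.
    return sorted(rng.sample(range(n_features), max_features))

rng = random.Random(0)

# Plain bagging of trees: every split may look at all 9 features.
print(candidate_features(9, rng, max_features=9))

# Random forest style: each split sees a fresh random subset (3 of 9 here).
for _ in range(3):
    print(candidate_features(9, rng))
```

Restricting each split to a random subset makes the individual trees less similar to one another, which is the point of the distinction drawn in the text.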
